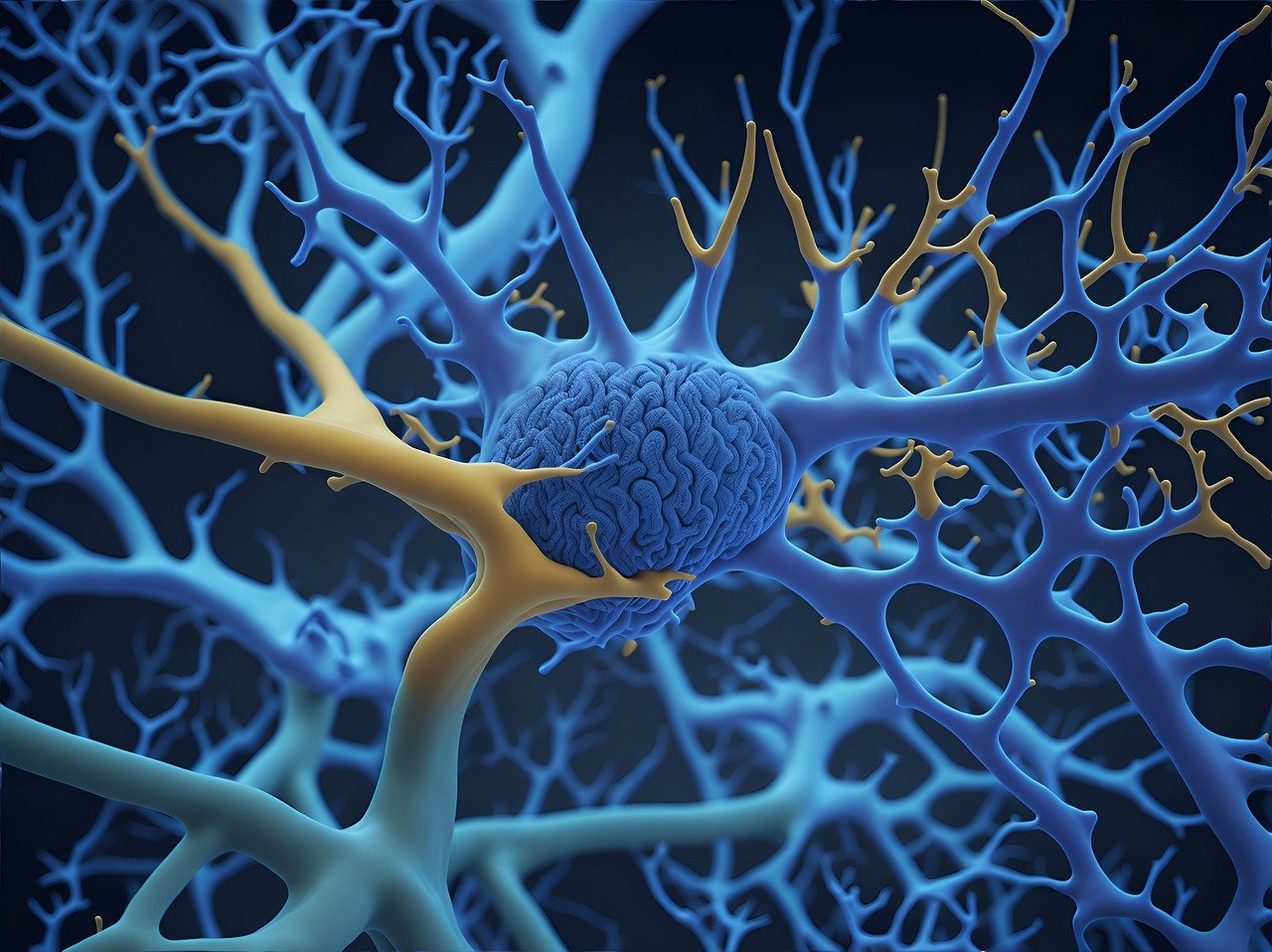

In recent years, advancements in artificial intelligence have brought us closer to understanding and replicating the intricate functions of the human brain. Central to this progress are artificial neurons, a pivotal component of neural networks that aim to mimic human brain function. This article delves into the fascinating world of artificial neurons, exploring how they emulate brain processes and contribute to the field of brain-inspired technology. By examining the intricacies of these synthetic equivalents, we gain insights into potential applications in cognitive computing, neuromorphic computing, and beyond, ultimately furthering our quest to replicate human intelligence through machine learning and deep learning models.

1. Understanding Artificial Neurons: The Building Blocks of Brain-Inspired AI

Artificial neurons, often referred to as units or nodes, are fundamental components of artificial neural networks (ANNs). They play a pivotal role in brain-inspired AI by emulating the activity of biological neurons found in the human brain.

Structure of Artificial Neurons

An artificial neuron consists of several essential parts designed to replicate the functionality of a biological neuron:

- Inputs: Just like dendrites in biological neurons receive signals, artificial neurons receive multiple input signals. Each input can have an associated weight, representing its importance.

      inputs = [0.5, 0.3, 0.2]
      weights = [0.4, 0.7, 0.1]

- Weights and Bias: Weights are numeric values updated during training to better reflect the importance of each input. The bias is an extra parameter added to the linear combination of inputs to allow flexibility in the output.

      bias = 0.1
      weighted_sum = sum(i * w for i, w in zip(inputs, weights)) + bias

- Activation Function: After computing the weighted sum, the artificial neuron passes this result through a nonlinear activation function, similar to how a biological neuron fires an action potential. Common activation functions include sigmoid, tanh, and ReLU.

      import math

      # Sigmoid activation function
      def sigmoid(x):
          return 1 / (1 + math.exp(-x))

      activated_output = sigmoid(weighted_sum)

Functionality and Information Processing

- Information Aggregation: Each artificial neuron aggregates information from the preceding layer by combining its inputs linearly, as a weighted sum plus a bias.

- Activation and Transformation: The use of nonlinear activation functions enables the network to model complex patterns and phenomena, which simple linear models cannot capture.

- Propagation: The activated signals are then passed through the network layers, mimicking the transmission of biological signals through synaptic connections.

Example of a Simple Artificial Neuron

Here’s a simple demonstration in Python to illustrate an artificial neuron:

    import numpy as np

    # Define the activation function
    def relu(x):
        return max(0, x)

    # Inputs and corresponding weights
    inputs = np.array([1.5, -2.0, 3.0])
    weights = np.array([0.7, 0.2, -0.4])
    bias = 1.0

    # Calculate the weighted sum
    weighted_sum = np.dot(inputs, weights) + bias

    # Apply the activation function
    output = relu(weighted_sum)
    print(f"Output of the artificial neuron is: {output}")

Training Artificial Neurons

During training, artificial neurons learn by adjusting their weights and biases. This is typically done through a technique called backpropagation combined with an optimization algorithm like stochastic gradient descent (SGD).

In backpropagation, the error at the output is propagated backward through the network to compute how much each weight and bias contributed to it. The optimizer then adjusts those parameters in the direction that reduces the error.
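To make the update rule concrete, here is a minimal sketch of training a single sigmoid neuron with per-sample gradient descent. With only one neuron, backpropagation reduces to a single application of the chain rule. The squared-error loss, the learning rate, the epoch count, and the toy OR dataset are all assumptions chosen for illustration, not something the frameworks mentioned here prescribe.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def train_neuron(samples, targets, learning_rate=0.5, epochs=2000):
    """Fit one sigmoid neuron with per-sample gradient descent on squared error."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, targets):
            z = sum(w * xi for w, xi in zip(weights, x)) + bias
            y = sigmoid(z)
            # Chain rule for L = 0.5 * (y - target)^2: dL/dz = (y - target) * y * (1 - y)
            delta = (y - target) * y * (1.0 - y)
            # Move each parameter against its gradient
            weights = [w - learning_rate * delta * xi for w, xi in zip(weights, x)]
            bias -= learning_rate * delta
    return weights, bias

# Toy data (assumed for illustration): the logical OR function
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
targets = [0, 1, 1, 1]

weights, bias = train_neuron(samples, targets)
predictions = [
    round(sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias))
    for x in samples
]
print(predictions)
```

In a multi-layer network the same idea applies, except that each layer's `delta` is computed from the deltas of the layer after it.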

These principles make artificial neurons a powerful tool for modeling tasks associated with cognition: networks built from them improve through learning, loosely analogous to how our brains adapt over time.

Further Reading

For further details on the structure and functioning of artificial neurons, refer to the official PyTorch documentation and TensorFlow’s guide on neural networks. These resources provide comprehensive insights into neural network modeling, building blocks, and practical implementations.

2. The Science Behind Neural Networks and Human Brain Simulation

Artificial neural networks (ANNs) attempt to mimic the human brain by leveraging the principles of interconnected artificial neurons. These ANNs typically consist of layers of nodes, often described as neurons, which are interconnected through weighted edges. The core idea is to simulate the complexities of human brain activity, particularly processes related to learning and recognition.

In the human brain, neurons communicate via synapses, which we can compare to the weighted connections in ANNs. These synapses adjust their strength based on learning experiences, similar to how weights are adjusted during training in neural networks. Backpropagation, as popularized in the 1986 paper by Rumelhart, Hinton, and Williams, computes the gradient of the prediction error with respect to each weight, so that the weights can be adjusted to reduce that error.

The fundamental unit of the neural network, the artificial neuron, has a simple mathematical form: it multiplies each input signal by a weight, sums the results together with a bias, and passes that sum through an activation function f, so that output = f(w · x + b). Common activation functions include sigmoid, ReLU (Rectified Linear Unit), and tanh (hyperbolic tangent), each with its advantages in handling non-linear data:

    import numpy as np

    def sigmoid(x):
        return 1 / (1 + np.exp(-x))

    def relu(x):
        return np.maximum(0, x)

    def tanh(x):
        return np.tanh(x)

Activation functions introduce non-linearity, crucial for handling complex relationships within data, paralleling the complex, non-linear processing power of biological neurons.
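One way to see why the non-linearity matters is to check what happens without it. The sketch below uses small hand-picked matrices (hypothetical values, chosen only so the arithmetic is easy to follow) to show that two stacked linear layers collapse into a single linear layer, while inserting a ReLU between them breaks that equivalence.

```python
import numpy as np

# Hypothetical inputs and weights, chosen by hand for illustration
x = np.array([[1.0, -2.0],
              [0.5, 3.0]])           # 2 samples, 2 features
W1 = np.array([[1.0, -1.0],
               [2.0, 0.5]])          # first layer weights
W2 = np.array([[0.5],
               [-1.0]])              # second layer weights

# Two stacked linear layers without an activation...
two_linear_layers = (x @ W1) @ W2
# ...are equivalent to one linear layer with weight matrix W1 @ W2
one_linear_layer = x @ (W1 @ W2)
print(np.allclose(two_linear_layers, one_linear_layer))

# Inserting a ReLU between the layers breaks the equivalence
with_relu = np.maximum(0, x @ W1) @ W2
print(np.allclose(with_relu, one_linear_layer))
```

However many linear layers are stacked, the result is still one linear map, which is why depth only adds expressive power when an activation function sits between the layers.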

Additionally, the structure of neural networks—comprising input, hidden, and output layers—parallels different stages of information processing within the brain. The input layer receives raw data, akin to sensory information. Hidden layers process and transform this data, comparable to cognitive processing regions in the brain, and the output layer produces the final result, akin to motor or verbal responses.
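The input, hidden, and output stages described above can be sketched as a single forward pass. This is an illustrative example only: the layer sizes, the random weights, and the choice of ReLU and softmax are assumptions, and a trained network would have learned its weights rather than sampled them.

```python
import numpy as np

def relu(x):
    return np.maximum(0, x)

def softmax(x):
    shifted = x - np.max(x)          # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()

def forward(x, params):
    """Pass one input vector through a hidden layer and an output layer."""
    hidden = relu(params["W1"] @ x + params["b1"])
    return softmax(params["W2"] @ hidden + params["b2"])

rng = np.random.default_rng(42)
params = {
    "W1": rng.normal(scale=0.5, size=(4, 3)),   # input (3) -> hidden (4)
    "b1": np.zeros(4),
    "W2": rng.normal(scale=0.5, size=(2, 4)),   # hidden (4) -> output (2)
    "b2": np.zeros(2),
}

x = np.array([0.2, -1.0, 0.5])       # raw input, the "sensory" data
probs = forward(x, params)           # output layer: one probability per class
print(probs, probs.sum())
```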

Researchers in AI and neuroscience are keen on evolving these models to better replicate the functioning of the human brain. For example, convolutional neural networks (CNNs), inspired by the visual cortex, specialize in image and spatial data by employing filters that mimic the receptive fields of visual neurons. Similarly, recurrent neural networks (RNNs), including variants like LSTMs and GRUs, are designed to handle sequential data and are inspired by the brain’s memory functions.
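To illustrate how a filter behaves like a receptive field, here is a minimal sketch of the sliding-window operation a convolutional layer performs, written in plain Python. The image and the edge-detecting kernel are hypothetical hand-written values; in a real CNN the kernel values are learned during training.

```python
def convolve2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Each output value only "sees" a small patch of the image
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

# A 4x4 image with a vertical edge between columns 1 and 2
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical filter that responds to dark-to-bright vertical edges
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, kernel)
print(feature_map)  # → [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The filter responds only where the edge falls inside its window, which is the sense in which it resembles a receptive field.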

While artificial neural networks strive to mimic the human brain, simulating the full complexity of biological neural networks remains a challenge. Advancements in brain-inspired AI seek to close this gap by integrating more intricate models and learning methodologies, pushing the boundaries in both AI capabilities and our understanding of the brain.

3. Deep Learning Models: Advancements in Mimicking Human Brain Functionality

Deep learning models have revolutionized the way artificial intelligence mimics human brain functionality. At their core, these models consist of multiple layers of interconnected artificial neurons designed to learn patterns and representations from vast amounts of data. This learning process is inspired by the neural networks in the human brain, although the artificial versions are much simpler and more rigidly structured.

1. Evolution from Shallow to Deep Networks

Initially, machine learning models relied on shallow architectures with at most one or two hidden layers (for example, logistic regression, which has no hidden layer at all, and basic neural networks). However, with advancements in computational power and data availability, deep learning models with many layers (often called deep neural networks or DNNs) became feasible. These deep architectures, particularly convolutional neural networks (CNNs) and recurrent neural networks (RNNs), have shown tremendous success in image and speech recognition, tasks that the human brain handles with great efficiency.

2. Convolutional Neural Networks (CNNs) for Visual Processing

CNNs are a type of deep learning model particularly effective for image-related tasks. They feature convolutional layers that automatically and adaptively learn spatial hierarchies of features from input images. This is somewhat akin to the way the visual cortex in the human brain processes visual information. Key operations in CNNs include convolution, pooling, and fully connected layers, each contributing to the model’s ability to focus on different details and patterns.

    # Sample code to define a simple CNN using TensorFlow
    import tensorflow as tf
    from tensorflow.keras import layers, models

    model = models.Sequential()
    model.add(layers.Conv2D(32, (3, 3), activation='relu', input_shape=(64, 64, 3)))
    model.add(layers.MaxPooling2D((2, 2)))
    model.add(layers.Conv2D(64, (3, 3), activation='relu'))
    model.add(layers.MaxPooling2D((2, 2)))
    model.add(layers.Conv2D(128, (3, 3), activation='relu'))
    model.add(layers.Flatten())
    model.add(layers.Dense(64, activation='relu'))
    model.add(layers.Dense(10, activation='softmax'))

If you want to learn more about CNNs, you can refer to the TensorFlow documentation.

3. Recurrent Neural Networks (RNNs) for Sequence Learning

RNNs are designed to handle sequential data, making them well-suited for tasks involving time-series data or natural language processing. Unlike feedforward networks, RNNs have connections that form directed cycles, allowing them to maintain a ‘memory’ of previous inputs. This loosely mirrors the brain’s ability to retain information over time. Long Short-Term Memory (LSTM) units and Gated Recurrent Units (GRUs) are popular RNN variants that mitigate issues such as the vanishing gradient problem.

    # Sample code to define a simple RNN using Keras
    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import SimpleRNN, Dense

    model = Sequential()
    model.add(SimpleRNN(128, input_shape=(100, 1), return_sequences=True))
    model.add(SimpleRNN(64, return_sequences=False))
    model.add(Dense(10, activation='softmax'))

For further details on RNNs, the Keras documentation provides comprehensive guides and examples.
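To show what the recurrent 'memory' amounts to, here is a minimal sketch of a one-unit recurrent cell in plain Python. It is not how Keras implements `SimpleRNN` internally; the scalar weights `w_x`, `w_h`, and `b` are hypothetical fixed values, whereas a real network learns them and uses vectors and matrices.

```python
import math

def rnn_forward(inputs, w_x, w_h, b):
    """Run a one-unit recurrent cell over a sequence and return every hidden state."""
    h = 0.0                      # initial hidden state
    states = []
    for x in inputs:
        # The new state depends on the current input and on the previous state
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# A single pulse followed by silence: the state keeps a fading trace of the pulse
sequence = [1.0, 0.0, 0.0, 0.0]
states = rnn_forward(sequence, w_x=1.0, w_h=0.5, b=0.0)
print([round(s, 3) for s in states])
```

The hidden state stays non-zero after the input has returned to zero, and it shrinks at every step. That fading trace is a small-scale picture of why plain RNNs struggle with long-range dependencies and why LSTM and GRU cells were introduced.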

4. Generative Adversarial Networks (GANs)

A more recent advancement in deep learning is the development of GANs. These consist of two neural networks, the generator and the discriminator, which contest with each other in a zero-sum game framework. The generator creates data that mimics real data, while the discriminator evaluates its authenticity. This generative modeling technique has been used to produce images, music, and even realistic human faces, pushing the boundaries of how closely AI can mimic creative aspects of human brain functionality.

    # Simplified overview of GAN structure
    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Dense, LeakyReLU

    # Generator
    generator = Sequential()
    generator.add(Dense(256, input_dim=100))
    generator.add(LeakyReLU(alpha=0.2))
    generator.add(Dense(512))
    generator.add(LeakyReLU(alpha=0.2))
    generator.add(Dense(1024))
    generator.add(LeakyReLU(alpha=0.2))
    generator.add(Dense(784, activation='tanh'))

    # Discriminator
    discriminator = Sequential()
    discriminator.add(Dense(1024, input_dim=784))
    discriminator.add(LeakyReLU(alpha=0.2))
    discriminator.add(Dense(512))
    discriminator.add(LeakyReLU(alpha=0.2))
    discriminator.add(Dense(256))
    discriminator.add(LeakyReLU(alpha=0.2))
    discriminator.add(Dense(1, activation='sigmoid'))

The TensorFlow GAN tutorial offers more in-depth insights and practical examples.
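The structure above defines the two networks but not how they compete. As a rough sketch, the adversarial objective can be written as two binary cross-entropy losses. The function names here are hypothetical, the discriminator outputs are made-up probabilities rather than results from a trained model, and the generator loss shown is the commonly used non-saturating variant, which is an assumption about the setup.

```python
import math

def binary_cross_entropy(prediction, label):
    eps = 1e-12                                   # avoid log(0)
    prediction = min(max(prediction, eps), 1 - eps)
    return -(label * math.log(prediction) + (1 - label) * math.log(1 - prediction))

def discriminator_loss(d_real, d_fake):
    """The discriminator wants D(real) close to 1 and D(fake) close to 0."""
    return binary_cross_entropy(d_real, 1) + binary_cross_entropy(d_fake, 0)

def generator_loss(d_fake):
    """The generator wants the discriminator to label its samples as real."""
    return binary_cross_entropy(d_fake, 1)

# Hypothetical discriminator outputs (probability that a sample is real)
print(discriminator_loss(d_real=0.9, d_fake=0.1))  # low: discriminator is winning
print(generator_loss(d_fake=0.1))                  # high: generator is being caught
print(generator_loss(d_fake=0.9))                  # low: generator fools the discriminator
```

Training alternates between lowering the discriminator loss and lowering the generator loss, so an improvement for one network is a setback for the other.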

These examples reflect just a few of the sophisticated models underpinned by deep learning that contribute to the remarkable ability of artificial intelligence to mimic human brain functions. The continuous advancements in deep learning architectures, optimization techniques, and computational resources promise even more profound breakthroughs in mimicking the cognitive processes underlying human intelligence.

4. The Role of Neuromorphic Computing in Brain-Like Computing Systems

Neuromorphic computing is a revolutionary approach in the development of brain-like computing systems. It focuses on designing hardware that mimics the neural structure and functioning of the human brain more closely than traditional computing architectures. The objective is to create systems that can perform complex tasks such as pattern recognition, learning, and decision-making more efficiently and with lower power consumption compared to conventional architectures.

The Essence of Neuromorphic Computing:

Neuromorphic computing leverages principles from the neuroscience domain, specifically the intricacies of neural networks in the human brain. Traditional computing systems rely on the von Neumann architecture, which separates memory and processing units, leading to bottlenecks during data transfer. In contrast, neuromorphic chips integrate memory and processing elements, emulating the distributed nature of neural processing in the brain. This integration minimizes latency and enhances computational efficiency.

Key Components of Neuromorphic Systems:

- Silicon Neurons: Neuromorphic systems utilize silicon-based neurons that operate similarly to biological neurons. These artificial neurons can perform tasks such as spiking, integrating synaptic inputs, and self-regulating based on activity levels.

- Synapses: Just as biological neurons communicate via synapses, neuromorphic systems employ artificial synapses that manage the transmission of electrical signals between silicon neurons. These synapses can dynamically adjust their strength based on the learning algorithms, mimicking synaptic plasticity observed in the human brain.

- Spiking Neural Networks (SNNs): Traditional neural networks use continuous values for computations, while Spiking Neural Networks (SNNs), an essential part of neuromorphic computing, rely on discrete event-based processing. In SNNs, information is transmitted in the form of spikes or action potentials, akin to how neurons communicate in the brain. This leads to lower energy consumption and more biologically plausible models.

Example Python code for a simple spiking neural network using the NEST simulator:

    # Note: this example uses NEST 2.x model names. NEST 3.x renamed
    # "spike_detector" to "spike_recorder", so adjust for newer releases.
    import nest

    # Create neurons
    neurons = nest.Create("iaf_psc_alpha", 100)

    # Create a Poisson generator with a rate of 100 spikes/s
    poisson_gen = nest.Create("poisson_generator", 1, {"rate": 100.0})

    # Create a multimeter to record membrane potentials
    multimeter = nest.Create("multimeter", 1)
    nest.SetStatus(multimeter, {"withtime": True, "record_from": ["V_m"]})

    # Create a spike detector to record spikes
    spikedetector = nest.Create("spike_detector", 1)

    # Connect the Poisson generator to the neurons
    nest.Connect(poisson_gen, neurons, syn_spec={"weight": 100.0})

    # Connect the multimeter to the neurons
    nest.Connect(multimeter, neurons)

    # Connect the neurons to the spike detector
    nest.Connect(neurons, spikedetector)

    # Simulate for 1000 ms
    nest.Simulate(1000.0)
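For readers without a NEST installation, the core idea of a spiking neuron can be sketched in a few lines of plain Python. This is a simplified leaky integrate-and-fire model, not the `iaf_psc_alpha` model NEST uses; the parameter values are hypothetical and dimensionless, chosen only to make the behavior easy to see.

```python
def simulate_lif(input_current, dt=1.0, tau=10.0, v_rest=0.0,
                 v_threshold=1.0, v_reset=0.0):
    """Simulate a leaky integrate-and-fire neuron; return the steps at which it spikes."""
    v = v_rest
    spike_times = []
    for step, current in enumerate(input_current):
        # Leak toward the resting potential while integrating the input (Euler step)
        v += dt * (-(v - v_rest) + current) / tau
        if v >= v_threshold:
            spike_times.append(step)   # emit a discrete spike event
            v = v_reset                # reset the membrane potential
    return spike_times

# A constant drive strong enough to reach threshold produces regular spiking
strong_drive = simulate_lif([1.5] * 100)
# A weak drive never reaches threshold, so no spikes are emitted
weak_drive = simulate_lif([0.5] * 100)
print(strong_drive)
print(weak_drive)
```

Information is carried by when the spikes occur rather than by a continuous output value, and nothing is emitted while the neuron stays below threshold. That event-driven behavior is the source of the energy savings neuromorphic hardware aims for.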

Leading Edge Technologies and Companies:

Prominent tech companies are pioneering the development of neuromorphic chips. For instance, Intel’s Loihi and IBM’s TrueNorth chips represent significant strides in neuromorphic computing. These chips are designed to support advanced machine learning and AI applications by mimicking the parallel processing capabilities of the human brain.

Potential and Challenges:

Neuromorphic computing holds the promise of creating AI systems that are more adaptive, energy-efficient, and capable of real-time learning. However, significant challenges remain, such as developing scalable hardware architectures and creating effective software frameworks for programming and training neuromorphic systems.

For further details, consult the official documentation for Intel’s Loihi and IBM’s TrueNorth.

By harnessing the fundamentals of brain-like computing, neuromorphic systems offer a promising pathway toward achieving more sophisticated and efficient AI technologies that truly mimic human brain functionality.

5. Cognitive Computing: Bridging the Gap Between AI and Neuroscience

Cognitive computing sits at the intersection of artificial intelligence (AI) and neuroscience, designed to emulate the human brain’s decision-making and learning capabilities. Representing an advanced form of artificial intelligence, cognitive computing systems leverage neural network algorithms to model complex human thought processes and sensory perceptions.

One of the primary distinctions of cognitive computing is its ability to process and analyze vast amounts of unstructured data, similar to how the human brain interprets sensory information. Systems like IBM’s Watson have demonstrated this proficiency, using algorithms to parse language, images, audio, and other forms of data to provide nuanced and context-aware responses. Cognitive computing systems often combine several neural network components, each specializing in a different facet of understanding, from natural language processing to visual recognition and beyond.

Moreover, cognitive computing aims to go beyond mere data analysis. It seeks to mimic human brain strategies in problem-solving and adaptive learning by constantly refining its models based on new data inputs. This is achieved through techniques such as reinforcement learning, where the AI system learns and adapts through trial-and-error feedback, much like how humans learn from their environment.
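Trial-and-error learning can be illustrated with one of the simplest reinforcement learning settings, a multi-armed bandit solved with an epsilon-greedy strategy. This is a minimal sketch rather than a cognitive computing system: the function name, the three hidden reward probabilities, and the exploration rate are all assumptions made for the example.

```python
import random

def run_bandit(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent learning which action pays off most often."""
    rng = random.Random(seed)
    n_actions = len(reward_probs)
    estimates = [0.0] * n_actions      # estimated value of each action
    counts = [0] * n_actions           # how often each action was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)                        # explore
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])  # exploit
        # The environment returns feedback: reward 1 with a hidden probability
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        # Incremental average: nudge the estimate toward the observed reward
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts

# Hypothetical environment: three actions with hidden success probabilities
estimates, counts = run_bandit([0.2, 0.5, 0.8])
print(estimates)
print(counts)
```

The agent is never told which action is best. It only sees rewards, yet over time it should come to choose the most rewarding action far more often than the others.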

As a concrete example, consider a cognitive computing system designed for healthcare. Such a system analyzes patient records, medical literature, and real-time monitoring data to generate diagnostic suggestions and personalized treatment plans, a task traditionally reliant on human experts.

Cognitive computing frameworks often incorporate components from both AI and neuroscience research. For instance, neuromorphic computing elements are sometimes integrated to enhance the efficiency and efficacy of these systems. Neuromorphic chips, designed to emulate the brain’s neural architecture, can facilitate faster and more efficient data processing, significantly improving the cognitive system’s response times and learning capabilities.

There are multiple alternatives and varying approaches to building cognitive computing systems. Major tech companies like IBM and Google have invested heavily in developing these systems, but there are also open-source alternatives available for experimentation and development. For instance, the OpenCog project (https://opencog.org/) is an open-source platform aimed at creating cognitive models with the potential to achieve general AI, drawing from both AI and neuroscience frameworks.

The best approaches to developing cognitive computing systems typically emphasize integrating multidisciplinary techniques from AI, machine learning, and neuroscience. Using frameworks like TensorFlow (https://www.tensorflow.org/) or PyTorch (https://pytorch.org/) to design and train neural networks, developers can create models that simulate cognitive functions.

An example in code using TensorFlow to build a simple neural network for cognitive tasks might look like this:

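    import numpy as np
    import tensorflow as tf
    from tensorflow import keras
    from tensorflow.keras import layers

    # Placeholder synthetic data so the example runs end to end;
    # replace with a real dataset (10 classes assumed, matching the output layer)
    input_features = 20
    train_data = np.random.rand(1000, input_features).astype("float32")
    train_labels = np.random.randint(0, 10, size=(1000,))

    # Define a simple feedforward neural network
    model = keras.Sequential([
        layers.Dense(512, activation='relu', input_shape=(input_features,)),
        layers.Dropout(0.2),
        layers.Dense(256, activation='relu'),
        layers.Dense(10, activation='softmax')
    ])

    # Compile the model
    model.compile(optimizer='adam',
                  loss='sparse_categorical_crossentropy',
                  metrics=['accuracy'])

    # Train the model
    model.fit(train_data, train_labels, epochs=5, validation_split=0.2)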

This example showcases a fundamental building block of a cognitive system, one that could be extended and refined to perform more complex tasks like natural language understanding or contextual decision-making.

By leveraging the principles underlying both AI and neuroscience, cognitive computing makes strides towards creating a new generation of brain-inspired technology capable of intelligent, adaptive, and contextually aware systems.

6. Practical Applications of Brain-Inspired Technology in Modern AI

Brain-inspired technology has revolutionized modern AI by integrating principles derived from the human brain to develop more efficient, adaptive, and intelligent systems. Here are some of the key practical applications:

1. Autonomous Vehicles:

Self-driving cars leverage artificial neural networks to process vast amounts of sensor data in real-time, allowing them to navigate intricate environments, detect obstacles, and make split-second decisions. For instance, Tesla’s Autopilot system uses deep learning models trained on millions of miles of driving data, imitating the decision-making processes of human drivers. More details about this can be found in their documentation.

2. Healthcare and Medical Diagnostics:

AI systems, such as those developed by IBM Watson Health, use cognitive computing to analyze medical records and assist in diagnosing diseases. Tools like these can perform image recognition tasks to identify anomalies in medical imaging, such as MRIs and CT scans, supporting the work of human radiologists and often operating at greater speed. Info on IBM’s cognitive computing in healthcare is accessible in their documentation.

3. Natural Language Processing (NLP):

Artificial neural networks power voice assistants like Amazon Alexa and Google Assistant, enabling them to understand and process human speech. These systems utilize deep learning models for tasks such as speech recognition, sentiment analysis, and language translation, closely replicating human cognitive processes involved in language comprehension. You can explore this more in Google’s NLP overview.

4. Financial Services:

In the finance industry, AI algorithms are used for fraud detection, risk assessment, and automated trading. Neural networks analyze transaction patterns and learn to spot irregularities or potential threats. Additionally, AI-powered robo-advisors offer personalized financial advice by simulating human financial planners’ behaviors and strategies. For detailed information, consult the AI in finance guide.

5. Entertainment and Media:

Streaming services like Netflix and Spotify use recommendation systems based on neural network algorithms to suggest content to users. These systems analyze user preferences and behavior to deliver personalized recommendations by mimicking the associative patterns of the human brain. Netflix’s recommendation system is documented in their teaching resources.

6. Robotics:

AI-driven robots utilize brain-inspired technology to perform complex tasks in dynamic environments. For example, Boston Dynamics’ robots incorporate machine learning techniques that help them adapt to new situations, learn from their surroundings, and move with considerable agility and precision. Learn more about their technology in the Boston Dynamics overview.

By integrating these advanced technologies rooted in the structure and function of human neural networks, AI applications across diverse fields continue to benefit from the enhanced capabilities provided by brain-inspired methods. These applications demonstrate the transformative potential of artificial intelligence as it draws closer to mimicking human cognitive processes.